Fast.ai Lesson 1: Summary, notes and how I trained my first AI model in 10 minutes.

After ignoring the AI hype for last couple of years , I finally took the plunge to start learning it. I started with the fast.ai course (Accessible here for free) which is absolutely great for beginners. So, let’s dive into the world of deep learning with lesson 1 -

The good things about fast.ai course

It’s led by Jeremy Howard, one of the most respected voices in AI/ML.

You don’t need to spend months on math before touching code. You train a model in lesson 1 itself.

The Fast.ai forums are one of the most active, helpful online learning communities out there.

The course has some seriously impressive alumni — people who went on to found startups or work at great AI-Labs like OpenAI, Google, Nvidia, etc.

Basically, it’s not just “another AI course.” It’s built for doers.

Key concepts

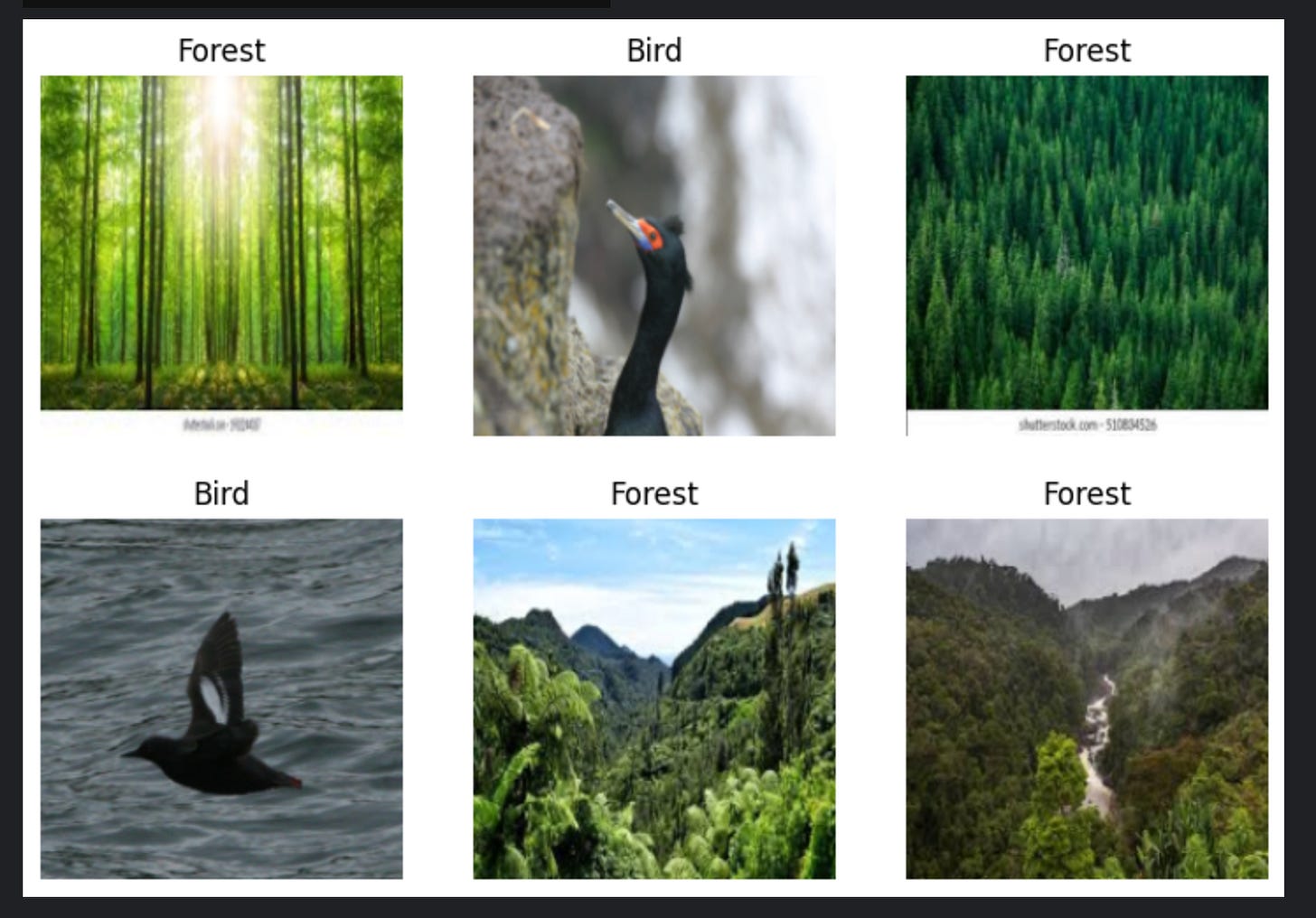

The first lesson introduces a newbie to the exciting world of AI/ML. After quick introductions the lesson starts on training the first deep learning model on classifying the images i.e. Categorising the pictures of birds and forest.

What surprised me:

Pre-trained models: You don’t start from scratch. Think of it like renting a furnished apartment—you just add your own posters and curtains (fine-tune with your data) and boom, you’re home.

Small data is enough: Forget gigabytes. We trained our first model with just 500 images and still hit more than 99% accuracy. That’s the moment you realize: oh wow, you don’t need to be google to get trainable data.

The Technical Stuff

How training a model works

Traditionally, a computer program is like a super-obedient intern: you spell out every step, it follows. No questions asked.

Training a model? Whole different game. Instead of instructions, you give it some random guesses (weights). The model tries a task, messes up, and you calculate just how badly it failed using a loss function. Then, you politely tell it:

“Okay buddy, adjust those weights so you embarrass yourself slightly less next time.”

Repeat this cycle a bunch of times (epochs), and eventually your model goes from “clueless intern” to “pretty competent junior engineer.”

(Note: it’s not just throwing random darts—there’s a method to how weights are updated. We’ll get to that in future lessons.)

The fastai library is built on the pytorch ( The open source library from facebook). We are using jupyter notebooks to write and test our code, which are fantastic tools for interactive development. The main class introduced in the first chapter is DataBlock.A DataBlock takes five important parameter for training a model -

Blocks - Inputs and outputs. In our case, we are feeding the images (

ImageBlock) and expecting a bird or forest category (CategoryBlock).get_items - this takes a function that tells DataBlock how to fetch the items ( the bird and forest images in our case). You can pass a function which returns the images or fastai provide a build-in function

get_image_fileswhich can get all the images from the given path.get_y - this takes a function that tells which images belongs to which category. You can write a custom function or use the built-in

get_parent which returns the folder name in which the image resides.Splitter - This is one of the most important arguments which a lot of other libraries miss. This splits the training images in two parts (Train set and validation set). The model is trained on the train and tested on the validation set.

item_tfms - This transforms the items (images in our case) such that it’s easy to operate on them in batches. Let’s say all the images are in different sizes or formats, it’s difficult to process them together. Item transformations creates them in a standard way so that it’s easy to process them together using the power of GPUs which can run a lot of multiple tasks in parallel. We can use Resize, crop, padding etc. to make the images uniform.

DataBlock is one of the most important classes in fastai library. I would definitely recommend you to check the official DataBlock documentation and it’s dedicated tutorial on fast.ai blog.

Then we create the Dataloaders using the DataBlock we just created and pass the dataloaders to the model for fine-tuning it.

dls = DataBlock(

blocks=(ImageBlock, CategoryBlock),

get_items=get_image_files,

splitter=RandomSplitter(valid_pct=0.2, seed=42),

get_y= parent_label,

item_tfms=[Resize(192, method='squish')]

).dataloaders(training_image_dir , bs=32)

dls.show_batch(max_n=6)

learn = vision_learner(dls, resnet18, metrics=error_rate)

learn.fine_tune(3)A Crucial Note on Data Splitting

While training a model, we need to keep some data separate so the model doesn't see it during training. We use this "validation set" to check the model's accuracy after training is finished. Keeping a validation set is so important that fast.ai won't let you train a model without one.

Generally, we use a

RandomSplitterto split the data, but you must be careful. For example, if you are training a model to predict future sales based on past sales, your validation set must be the most recent data (e.g., the last few months). Choosing random sales data for validation would not accurately test the model's predictive power on future outcomes.

Why Just fine-tuning the already trained models is sufficient

Why don't we need to train models from scratch? And why does a model trained on one dataset work so well on completely different data after just a few minutes of fine-tuning?

When we inspect the layers of a trained neural network, we find that the first layers learn to detect basic features like vertical, horizontal, and diagonal edges. Subsequent layers combine these to find more complex patterns like corners, repeating textures, and circular shapes. These fundamental visual elements are universal.

Any new model trained from scratch would just learn these same basic features again. By fine-tuning a pre-trained model, we are simply adjusting the final few layers to specialize in our specific task. This is why it's so fast and effective.

Things that broke down

Getting Data - Since the launch of OpenAI’s ChatGPT, companies have been very particular about sharing their data. In the course, Jeremy uses DuckDuckGo search engine to collect random photos of birds and forests, which starts throwing 403 Ratelimit Error. I tried the famous ImageNet database to get some images but lately, their APIs are down more than half the time. At last, I start working with the Kaggle datasets only.

Older Versions - The course was launched in 2022 and a lot has changed in the field of AI/ML since then. The notebook code provided by jeremy does not work if you try to copy the notebook and run it straightaway. We can fix this by pinning the version used at that time or can change to code to handle the updates in the libraries.

My Experiments

After building the Bird vs Forest model suggested in the book, I started working on the project of my own. I started with an Indian ethnic dresses classifier which had 16 categories but the code to handle this was exactly the same. The main issue was to train the model on large data which was taking a lot of time.

This was a rookie mistake. I ran the notebook in Kaggle using the standard CPU, which takes a lot of time for a large dataset for each epoch. I did not know that I can get up to 30 hours/week of GPU time free on kaggle. Later I discovered that while tinkering with settings and voila, I was blown away with the GPU speed.

You can access my “Which Dress” Notebook here. Also, you can access datast and categories here.

Bonus Project

A lot of times, we have all the data in the csv. The great thing about Dataloaders is that you can create one directly from your csv dataset. I build a Book genre predictor by cover image by loading the Images in Dataloaders directly from CSV.

dls = ImageDataLoaders.from_df(

training_images,

path=img_path,

fn_col='Filename',

label_col='Category',

valid_pct=0.2,

seed=78,

item_tfms=Resize(224),

batch_tfms=aug_transforms(2))You can check the whole code on Book genre predictor in this notebook.

Wrap up

Well, that’s a wrap for lesson 1 -

Fast.ai gets you hands-on fast, without waiting months to touch code.

Fine-tuning pretrained models is insanely powerful.

Even with small data, you can get production-quality results.

Let me know what how I am doing and what can I improve in the upcoming articles? Also, let us know what did you pick for your first model training and what issues did you face?

According to Jeremy Howard, A lot of students drop the course after or during the 3rd lecture. Check out my Fast.ai Chapter 0: Unofficial - Why Most People Quit Fast.ai at Chapter 4 (and How You Won’t).

P.S. If you enjoyed this post (or at least chuckled once), consider subscribing to my mailing list. I’ll be writing one blog for each chapter of the Fast.ai course, along with my experiments, as wells as on career advice and startups.

Don’t worry—I won’t spam you with cat memes… unless you’re into that 🐱. Just practical insights, resources, and maybe a joke or two to keep things fun.

I am sure this is going to be super helpful. I did the first lesson once, sometime back, and gave up. Mustering up the courage to start again after doing some courses :) Thank you once again for the insightful and endearing post.